Choosing the right security testing tools is hard, because each type of tool has a different purpose with unique strengths. It can get confusing, but it’s a lot easier when you can sort them into different methodologies. And the process becomes almost simple once we properly understand the different impacts that each methodology will have.

I was recently working with Theresa Mammarella to prepare a presentation for Devnexus 2023, the largest Java conference in North America. She pulled me in to help create the material because of my background building and implementing application security testing tools, but neither of us anticipated where the coming weeks would take us.

As it turns out, there’s a ton of chatter online about what the best approach is for securing and validating security on your project. You probably wouldn’t be surprised to know that just about every article we found was created by a different vendor trying to hawk a specific product. That made things really difficult when we wanted to provide an honest guide to these tools, so I’ll try not to join that noise here with this article.

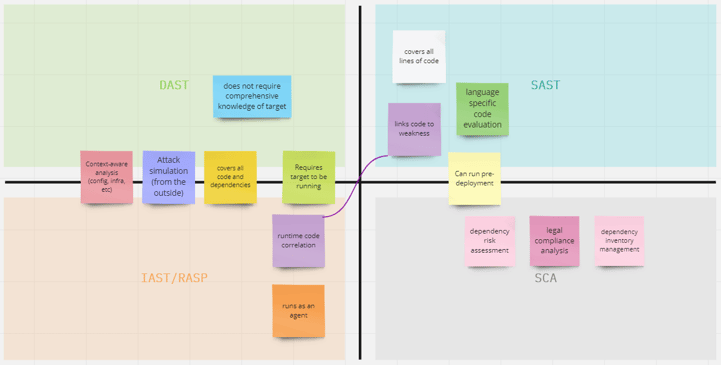

So we gathered all of the information we could find from a huge array of sources, and we began mapping the features that are common to each methodology.

Simply defining terms proved inadequate

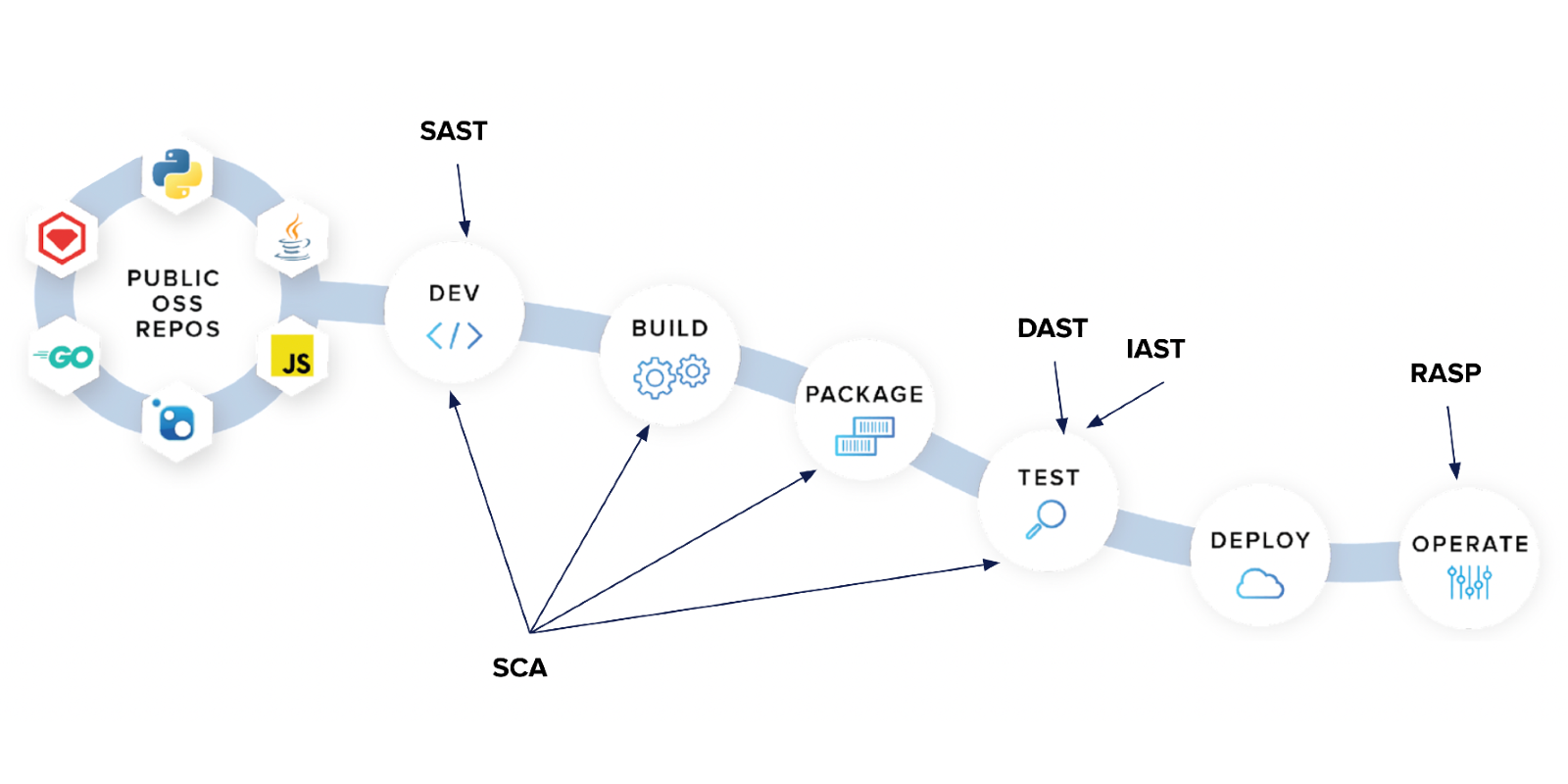

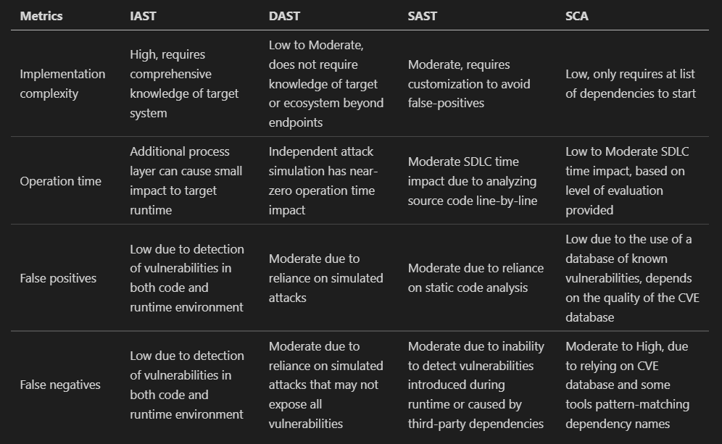

While we were writing our material, I started building a chart to organize our findings. You’ll note that we included SCA in our application security testing discussion, because we felt that SCA addresses the same core intent as the *AST approaches listed here (although it doesn’t do the same things).

Initially, I threw this table together just for myself to make sense of what I was reading, and it wasn’t quite as detailed at first. In fact, it wasn’t entirely accurate until after several revisions, because the information available on this topic is muddled. It’s muddled enough to trip up even someone like me, who has spent years working on DAST tools. I shared this with Theresa, and we refined it even further as we challenged each other’s understanding of the nuance.

Perhaps as you’re reading this, you find some nuance that we missed. Send me a tweet, and I’ll update this article if you have something notable that can be improved!

Comparing the methodologies was the true value

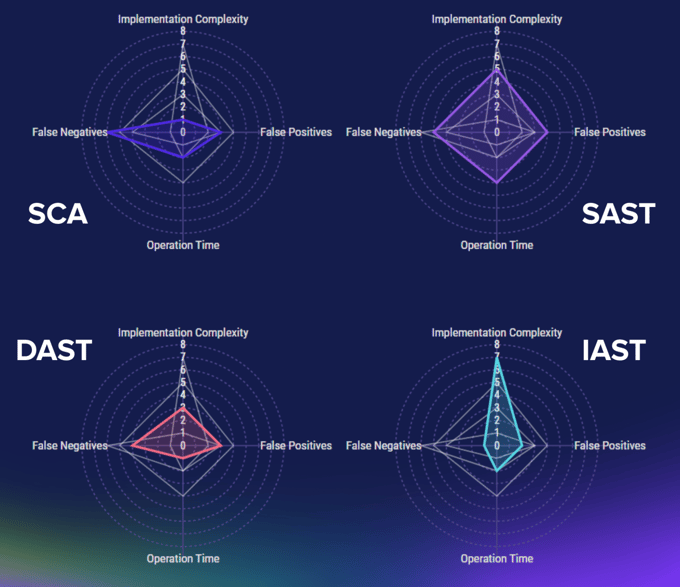

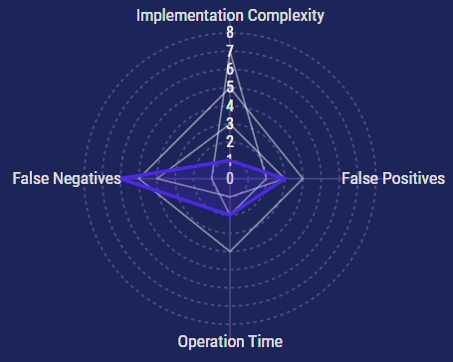

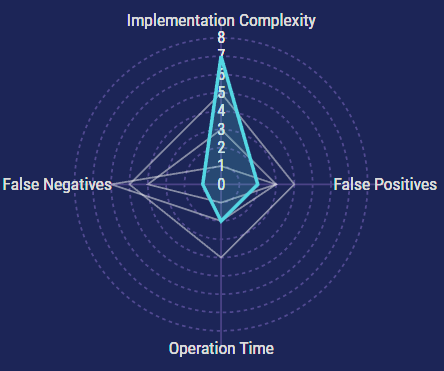

After we spent time refining our understanding, it occurred to me that this process was way more interesting than just explaining the technical bits of each methodology. I decided to start throwing numbers on each of the values in our table, and quickly pivoted to create a radar chart. It was at this point that I realized what we were really doing was measuring the impact of each methodology.

There are things that should be noted beyond the scope of just “how much space does it cover on the radar chart,” and we’ll get to that very soon. But for now, we can already get a peek into how much work will be necessary when using each methodology.

- Implementation complexity - Effort to set this up properly with the least falsey behavior

- Operation time - How much this slows down our normal operations

- False positives - How often things are flagged that shouldn’t be

- False negatives - How often things can get missed with this approach

In the case of Implementation Complexity, we raised the score for SAST and IAST in order to lower the falsey-ness scores. Less complex implementations will likely result in higher false positives or negatives.

But now we need to dig into what each of these tools actually is, so that we can better understand their impact. Let’s start with the “lowest level” tooling and move up from there.

Software composition analysis (SCA)

This is Theresa’s bread and butter because she works regularly with the Sonatype Lifecycle product team. Lifecycle is one of many SCA tools, and it’s the most robust in the class, with AI-driven tooling backed by an army of security researchers working to ensure enterprises have the best control over their situation and the most secure experience when using open source tools.

And that last part is the key: SCA tooling will look at your imported dependencies, but it won’t be used to inspect your own codebase. This means that it covers a critical gap in the software development lifecycle (SDLC), but it falls short of covering the rest of our security requirements. We’ll need one of the *ASTs to fill in the rest.

Depending on the approach and intricacy of a particular tool, SCA will do some or all of the following:

- Inspect third-party code

- Identify known vulnerabilities

- Spot licensing concerns

- Detect malicious programs

- Find code tampering

- Highlight weak code patterns

The impact of SCA on your workflow is generally nominal, but the downside is that many SCA tools fall glaringly short of giving you a true picture. The more advanced your SCA tooling is, the less likely you will be to have false negatives. If you’re working on a critical application that handles sensitive data, it’s best to find an SCA tool that does all of the things listed above.
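To make the “identify known vulnerabilities” step concrete, here is a minimal sketch of the matching an SCA tool does at its core: compare pinned dependencies against an advisory feed. The package names, versions, and the `ADVISORIES` list are all hypothetical, and a real tool would draw on curated vulnerability data and handle version ranges, transitive dependencies, and licensing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Advisory:
    package: str
    affected_versions: frozenset
    summary: str


# Hypothetical advisory feed, invented for illustration only.
ADVISORIES = [
    Advisory("examplelib", frozenset({"1.0.0", "1.0.1"}),
             "hypothetical remote code execution"),
]


def parse_pins(lines):
    """Parse 'name==version' pins, ignoring blanks and comments."""
    pins = {}
    for line in lines:
        line = line.split("#", 1)[0].strip()
        if not line or "==" not in line:
            continue
        name, version = line.split("==", 1)
        pins[name.strip().lower()] = version.strip()
    return pins


def find_known_vulnerabilities(pins, advisories):
    """Return (package, version, summary) for every pin an advisory covers."""
    findings = []
    for adv in advisories:
        version = pins.get(adv.package)
        if version in adv.affected_versions:
            findings.append((adv.package, version, adv.summary))
    return findings


# Hypothetical manifest: only third-party pins are inspected, never our own code.
manifest = ["examplelib==1.0.1", "otherlib==2.3.0  # not in the feed"]
findings = find_known_vulnerabilities(parse_pins(manifest), ADVISORIES)
print(findings)
```

Notice that `otherlib` passes silently. If the feed doesn’t know about a problem, neither do we, which is exactly where the false negatives of a weaker SCA tool come from.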

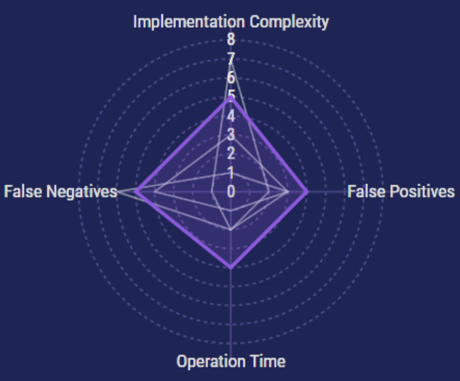

Static application security testing (SAST)

This one gets a hard time when you crawl the internet for information, but it has served a lot of us very well over the years. SAST really is handy, because it looks at all of our code, unlike SCA (though it doesn’t look at our dependencies, so the two are complementary).

When we run our SAST tooling in our dev processes, it will act like a big clever linter with an appetite for security risks. It’ll give us line-specific feedback early in the development process, saving time during code and security reviews later. If we’re also running DAST, IAST, or RASP, this stage of testing will still benefit us by catching a good portion of the mistakes earlier in the process.
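As a rough illustration of the “clever linter” idea, the sketch below uses Python’s standard `ast` module to flag calls to `eval()` and `exec()` with line numbers. The rule set and the sample source are assumptions made up for this example; real SAST tools apply far richer rules and data-flow analysis.

```python
import ast

# Illustrative rule set, not taken from any particular tool.
RISKY_CALLS = {"eval", "exec"}


def scan_source(source, filename="<example>"):
    """Return (filename, line, message) for each risky call in the source."""
    findings = []
    tree = ast.parse(source, filename=filename)
    for node in ast.walk(tree):
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            if node.func.id in RISKY_CALLS:
                findings.append(
                    (filename, node.lineno, f"call to {node.func.id}()")
                )
    return sorted(findings)


# Sample code under test; the second function is never called anywhere.
SAMPLE = '''\
def handler(user_input):
    return eval(user_input)

def never_called(payload):
    exec(payload)
'''

findings = scan_source(SAMPLE)
for finding in findings:
    print(finding)
```

The scan never runs the sample, so it reports both functions, including the one nothing calls. It also has no idea whether `user_input` is ever attacker-controlled, which is a small taste of where false positives come from.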

Some folks aren’t happy about SAST looking at code that isn’t run, but I would suggest that we’re actually getting a lot of value by inspecting everything in our codebase to ensure that we aren’t leaving an easter egg for attackers to use in a daisy-chain attack later on.

On the flip side of my opinion, though, is the fact that SAST tends to find a lot of things to complain about. This leaves us with two rough scenarios: false positives and information overload.

False positives can be addressed through a good thorough implementation that effectively trains the SAST by configuring it to ignore the things that don’t matter to you. But there will still be a lot of results when you first turn it on, which can be a huge blocker for your engineers when they get hit with a massive to-do list. The recommended way to minimize the impact of that noise is to restrict the breadth of the implementation at first, and scale up as each phase of problems is addressed.

Alongside the risk of false positives comes an inherent tolerance for false negatives. We can’t anticipate every application behavior until runtime, so SAST will inevitably miss some factors. Overall, SAST has the highest impact on our organization, but it remains a contender thanks to its balance of high specificity, early feedback, and moderately complex implementation requirements.

Dynamic application security testing (DAST)

This methodology is by far the least similar to the others on the list. DAST is your external operator who doesn’t care in the slightest about the code you’re running, but absolutely cares about the results.

Similar to IAST and RASP, this methodology involves testing a running application. That means it will test your application with awareness of the surrounding context. It is most commonly used to test development environments before they are deployed, but it may also be used for drift detection against production environments. In the latter example, the DAST would be able to catch negative impacts to the environment by running on a schedule and sending alerts accordingly.

Some people compare DAST to an automated penetration test, for good reason. Most DAST tools are simple boxes: you plug in your values and let them run to see what they find. Under the hood, the DAST will try everything that it expects an attacker to try. It’ll let you know what it was able to do… but because it’s operating from the outside, it won’t be able to tell you why it was able to. This concept will be important when we compare DAST to IAST later.

Some things that DAST might catch for you are:

- Input validation vulnerabilities, such as SQL Injection and XSS

- Authentication issues

- Configuration errors

- Weak ciphers
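Here is a small sketch of that outside-in behavior, using reflected XSS as the example. To keep it runnable without a network, `toy_app` is a made-up stand-in for a deployed application; a real DAST tool would send HTTP requests to a running environment and use a much larger payload set.

```python
import html


def toy_app(path, params):
    """Hypothetical app under test. The scanner never reads this code."""
    if path == "/search":
        # Deliberately vulnerable: echoes the parameter unescaped.
        return 200, f"<p>Results for {params.get('q', '')}</p>"
    if path == "/greet":
        return 200, f"<p>Hello {html.escape(params.get('name', ''))}</p>"
    return 404, "not found"


# One illustrative payload; real tools try many variations.
PROBE = "<script>alert(1)</script>"


def probe_reflected_xss(send, targets):
    """Send the probe to each (path, param) and report where it echoes back."""
    findings = []
    for path, param in targets:
        status, body = send(path, {param: PROBE})
        if status == 200 and PROBE in body:
            findings.append((path, param))
    return findings


findings = probe_reflected_xss(toy_app, [("/search", "q"), ("/greet", "name")])
print(findings)
```

The result names the endpoint and parameter that let the payload through, and nothing more. The scanner only sees responses, so it can’t point at the line of code responsible.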

All that said, I feel the need to shout an opinion out here: DAST is weird if you can’t look at the code for the tests. I’ve been in situations where a test fails but all I know is that it was able to get from point A to point B. If I can read the code, I can know what it did to find that out, and thus how to replicate it. Furthermore, I’ve had tests that failed and I nearly swore that the test was wrong… until I looked at the code to understand that it had simply found a gap I hadn’t addressed.

As a final point, tools that fall into the DAST category generally need very little in the way of configuration to get started, and they aren’t going to be very falsey because they’re only telling you the things that are currently possible when running against your software.

Interactive application security testing (IAST)

Before we dive into IAST, remember that we’re discussing methodologies. Of everything on this list, IAST spans the widest variety of implementation possibilities, to the point where folks like us need an additional layer of language to describe the nuanced approaches.

IAST tools can be considered active or passive. When I tell you that one of these is more robust and holistic in its approach, you would be justified in assuming that I’m about to say active, but no… Passive IAST is a substantially more comprehensive testing approach. This is because Active IAST requires you to actively engage it and handle the responses, while Passive IAST is a little gremlin that you let loose into your system (although it’s not terrorizing anything except your vulnerabilities).

In both cases, IAST operates similarly to DAST and RASP in that it tests a running application. Also in both cases, IAST will have familiarity with your entire codebase and will follow requests from the outside (similar to DAST).

The place where Passive and Active IAST diverge is where and how they’re executed.

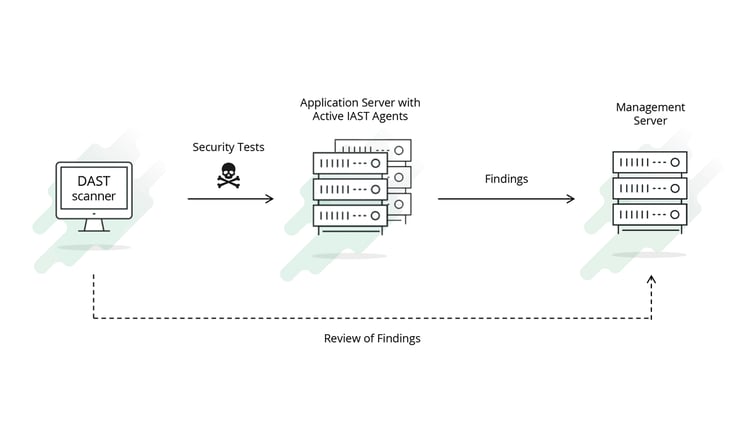

Active IAST

image credit: hdivsecurity.com/bornsecure/what-is-active-iast-and-passive-iast

In the image above, we’re basically saying that Active IAST is like DAST++. A controlled execution will run tests from the outside while logic inside your application keeps an eye on what’s happening as it happens. Think of it like breakpoints… the DAST-y tests will come into the application, and the IAST logic will track them through every line of the codebase. As soon as something weird happens, it will log the exact place in the code that allowed it, so you can review the findings to find your vulnerabilities.
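The DAST-driven flow described above can be sketched as an in-process agent that remembers what came in from the outside and checks whether it reaches a sensitive call. Everything here is hypothetical (the `Agent` class, the substring heuristic, the fake `run_query` sink); real IAST agents hook into the runtime and track data flow far more precisely.

```python
import traceback


class Agent:
    """Toy in-process agent: records request inputs and watches a sink."""

    def __init__(self):
        self.request_inputs = []
        self.findings = []

    def observe_input(self, value):
        self.request_inputs.append(value)
        return value

    def check_sink(self, sink_name, data):
        for value in self.request_inputs:
            # Naive heuristic (an assumption): raw input appearing in the
            # sink data means it was not parameterized or escaped.
            if value and value in data:
                # Frames, oldest first: app function, run_query, check_sink.
                caller = traceback.extract_stack(limit=3)[0]
                self.findings.append((sink_name, caller.name, caller.lineno))
                break


agent = Agent()


def run_query(sql):
    """Instrumented stand-in for a database driver call."""
    agent.check_sink("sql", sql)
    return []


def find_user(name):
    # Vulnerable: request data concatenated straight into SQL.
    return run_query("SELECT * FROM users WHERE name = '" + name + "'")


def find_user_safe(name):
    # The value would be bound separately as a query parameter.
    return run_query("SELECT * FROM users WHERE name = ?")


# The external test driver sends a payload; the agent follows it inside.
payload = agent.observe_input("x' OR '1'='1")
find_user(payload)
find_user_safe(payload)
print(agent.findings)
```

Compared with a purely external scan, the finding now carries the function name and line number where the payload reached the query, which is what makes the fix faster to write.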

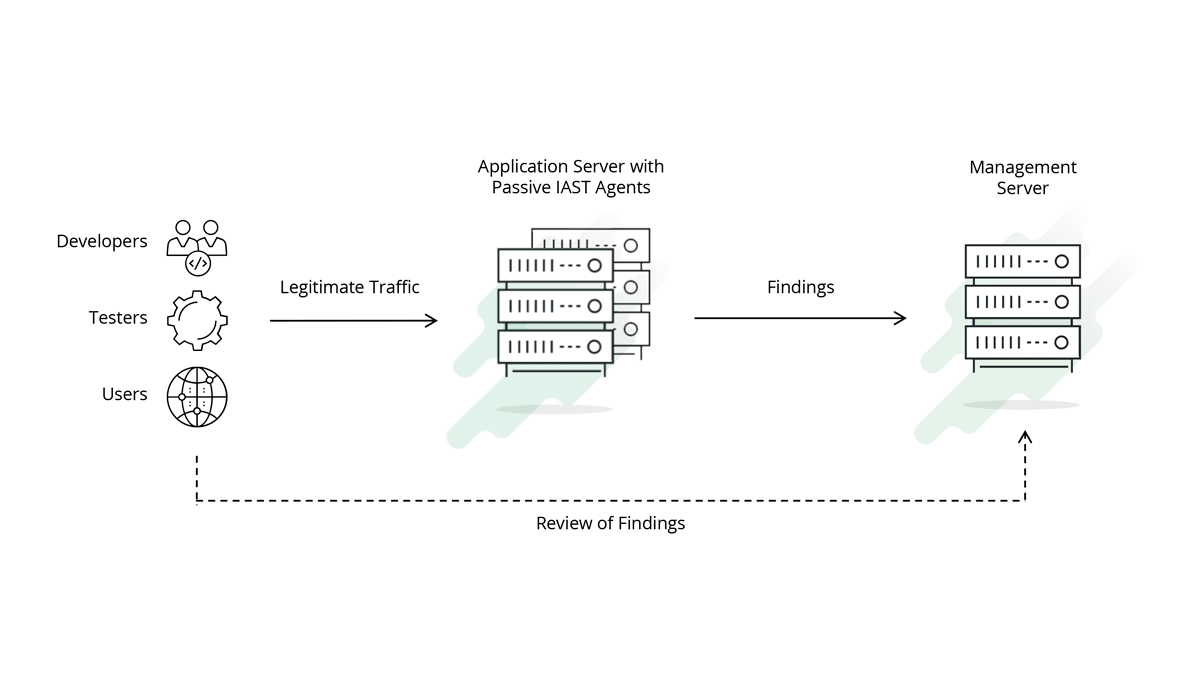

Passive IAST

image credit: hdivsecurity.com/bornsecure/what-is-active-iast-and-passive-iast

Now in this image, we’re doing almost the same thing… except in a production environment. Instead of the IAST code having a DAST-y program sending in controlled tests, we’re inspecting literally every interaction that comes across the network into our application. The exact way that the agent behaves is different based on the tool, but if it’s Passive IAST then it’ll have something sitting there monitoring those findings and alerting accordingly.

Whether you’re looking at Passive or Active IAST, you’re going to need quite a bit of runway to get the tooling implemented. Complexity varies, as always, but it may go as far as to require line-by-line integrations with your codebase. The upside is that it will have very specific results, so your developers can implement fixes substantially faster than they could with DAST findings, and it’s not very falsey because you’ve methodically integrated it and customized it.

Honorable mention: Runtime application self-protection (RASP)

This methodology is a fun one to talk about, but I won’t spend much time on it because most of the impact analysis is identical to Passive IAST. The key difference between RASP and Passive IAST is that Passive IAST alerts us while RASP protects us.

The RASP agent will have a high degree of control over our environment and network traffic (again, depending on the complexity of the tool and how you configure it). This allows it to watch traffic, note the result of different activities, and reject requests or correct any changes that were implemented by a vulnerability being exploited. Very cool.
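The alert-versus-protect distinction fits in a few lines. In this sketch the detection is identical in both modes and only the response differs; the `guard` function, its modes, and the substring check are assumptions invented for illustration, not any product’s API.

```python
class BlockedRequest(Exception):
    """Raised when the guard rejects a request in 'block' mode."""


def guard(mode, request_input, sql, alerts):
    """Same detection in both modes: 'alert' records, 'block' also rejects."""
    suspicious = bool(request_input) and request_input in sql
    if not suspicious:
        return sql
    alerts.append(sql)
    if mode == "block":
        raise BlockedRequest("request input reached a SQL sink unparameterized")
    return sql


alerts = []
payload = "x' OR '1'='1"
query = "SELECT * FROM users WHERE name = '" + payload + "'"

# Passive IAST style: record the finding and let the request continue.
passed = guard("alert", payload, query, alerts)

# RASP style: record the finding and stop the request.
try:
    guard("block", payload, query, alerts)
    blocked = False
except BlockedRequest:
    blocked = True

print(len(alerts), blocked)
```

The trade-off is that a false positive in alert mode costs someone a few minutes of triage, while a false positive in block mode rejects a legitimate request.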

Conclusion

This was a comparative impact analysis, so there’s no answer here to “What’s the best tool?” At the end of the day, there’s probably not even an answer to “What’s the best tool for me?” That’s because all of these tools have blind spots and inefficiencies. If any tool tries to do everything, it likely does one of those things poorly enough that it needs to have some compensation.

Similar to how a Security Operations Center may have SIEM, XDR, and EDR to cover every possible avenue, a development team may need to combine any of the above methodologies to ensure that no gap is left by a weakness in a single tool.

My only real advice is:

- Use at least one open source (or open core) *AST tool. That way your engineers can tear open the tests and get to the bottom of any weirdness that might arise.

- Make sure your SCA is appropriately suited to the size, complexity, and criticality of your environment. Open source dependencies are the open gate in your castle, so we can’t rely on our *AST to protect us once the attackers are inside.

I hope this helps you in your planning and self-reflection! I wish you the best of luck figuring out what combination of tooling is best for your situation!