Companies all over the place are trying to convert existing deployment scripts over to automated systems like Puppet and Chef. Many of the systems I've seen in the past few months have very complex codebases, builds that take 40 minutes to execute, and deployments that span hundreds of VM instances on public clouds like Amazon EC2 or private clouds using technologies like VMware. Tools like Puppet and Chef are emerging as market leaders, and the shift to large-scale automation is being driven by increasingly heterogeneous application architectures and the arrival of open source "cloud APIs" such as OpenStack.

In other words, everyone is scaling horizontally and everyone needs a repeatable, automated process to set up instances, deploy software, and perform tasks that were previously manual. Everyone seems to agree that the boundary between development and operations requires automation - it is time to stop wasting good operations and development talent on manual deployments. This trend is called Devops, and in this article I'm going to talk about where Nexus should fit into your automation effort.

Devops: A Common Misconception

The first thing you'll notice in any devops initiative is confusion. Operations "people" often lack context and see Puppet or Chef as a green light to move into the development infrastructure space. For them, this isn't just about taking a build and deploying it to production; ops believes that they are finally going to clean up that messy build system. For operations, the boundaries of devops often reach far into the application lifecycle: builds, CI servers, issue trackers. It's often very unclear where you draw the line between deployment automation and development infrastructure. Some of the questions that come up as part of a devops initiative are telling: "Can we get the Maven build to generate RPMs instead of WARs?"

If the first question in a move to Puppet or Chef is operations asking, "How do we build the application from source?", you are doing it wrong. You need to use Nexus as an integration point between Development and Operations. Otherwise, it gets awful, quickly. Operations and Development start to step on each other's toes and your devops initiative runs the risk of screwing up your development process. "We didn't make that deadline because we had to adapt the build to this new Puppet script."

Provide some structure to devops with a repository manager. Isolate operations from source code, and make sure that technologies like Puppet don't start affecting your builds.

Appropriate Boundaries in Devops

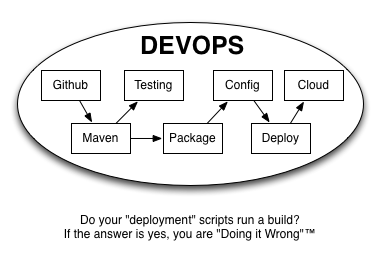

Does this look familiar? Does this capture your current approach to deployment automation (or what most people call "devops")? Do you run a build every time you have to do a deployment to production?

This is what I've seen in the field from overzealous Puppet implementations. Instead of relying on build output from Jenkins, the initial manifests (or cookbooks, if you are using Chef) check out source from Git, run a fairly complex build, and only then deal with deployment automation. From the perspective of development, this is wasted effort: tools like Jenkins, Hudson, and Bamboo already handle the generation of binary build output. Why go to the trouble of building something from source when the binaries are already available on Nexus? To automate builds once again in a Puppet script is duplication.

This approach also means that your operations team and the automation scripts they run have to take on the responsibility of setting up a development environment. If you start from source, your Puppet scripts have to manage dependencies, they have to know about source tags and branches, and they have to incorporate much more complexity than they would need if they just started with binary build output. If you change the build, you don't just have to worry about all of your developers. You'll also have to trust that these Puppet scripts are building the same output that Jenkins generates.
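To make "the same output" concrete, here is a minimal sketch in Python that treats two builds as equivalent only when their bytes match. The function names and the sample byte strings are hypothetical stand-ins for illustration; real artifacts would be read from WAR or JAR files.

```python
import hashlib


def sha1_hex(data: bytes) -> str:
    """Return the SHA-1 digest of a build artifact's bytes as a hex string."""
    return hashlib.sha1(data).hexdigest()


def same_build_output(jenkins_artifact: bytes, rebuilt_artifact: bytes) -> bool:
    """True only if the two artifacts are byte-for-byte identical."""
    return sha1_hex(jenkins_artifact) == sha1_hex(rebuilt_artifact)


# Hypothetical stand-ins for the contents of two build outputs.
from_jenkins = b"webapp built with the expected compiler options"
from_puppet = b"webapp built with someone's better idea"

print(same_build_output(from_jenkins, from_jenkins))  # True
print(same_build_output(from_jenkins, from_puppet))   # False
```

If deployment starts from the binary that Jenkins already published, this question never has to be asked: there is only one artifact.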

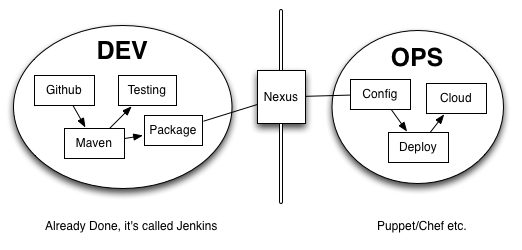

The following figure represents a more reasonable approach that separates the two concerns:

Jenkins is for Source, Puppet is for Deploys: Nexus stores the Bits in Between

Don't start devops from source. Puppet and Chef should gather binary build output from Nexus, the same way they install RPMs or DEBs from OS-level repositories. If you need to run a deployment to a production system in Puppet, you should just be able to tell your operations team to update the version number to the latest release. Puppet and/or Chef should then refer to Nexus to gather this build output.
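As a rough sketch of what "update the version number" can mean in practice, the Python below builds a download URL from Maven coordinates using the standard Maven 2 repository layout. The base URL, the coordinates, and the function name are assumptions made for illustration; the actual repository path depends on how your Nexus instance is set up.

```python
def artifact_url(repo_base: str, group_id: str, artifact_id: str,
                 version: str, packaging: str = "war") -> str:
    """Build a download URL using the standard Maven 2 repository layout:
    <group path>/<artifactId>/<version>/<artifactId>-<version>.<packaging>
    """
    group_path = group_id.replace(".", "/")
    filename = f"{artifact_id}-{version}.{packaging}"
    return "/".join([repo_base.rstrip("/"), group_path, artifact_id, version, filename])


# Hypothetical repository base URL and coordinates, for illustration only.
NEXUS_RELEASES = "https://nexus.example.com/repository/releases"

print(artifact_url(NEXUS_RELEASES, "com.example", "webapp", "1.4.2"))
# https://nexus.example.com/repository/releases/com/example/webapp/1.4.2/webapp-1.4.2.war
```

The only input that changes between deployments is the version string, which is the point: operations never needs to know how the WAR was built.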

Jenkins (or Hudson or Bamboo) should continue to be the tool you use to run both continuous integration builds and release builds. When I configure Jenkins these days I usually have a 15-minute build that checks Git constantly alongside a release build that is triggered only when I'm ready to run the Maven release plugin. In this approach, I don't have to have a separate "build system". Everything is consolidated into Jenkins, and if I want the Puppet people to deploy something I just trigger a release build, wait a few minutes, and send them a collection of version numbers.
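One way to picture that "collection of version numbers" is a plain list of Maven coordinates, one per line. The Python sketch below parses such a list; the `groupId:artifactId:version` format, the `Release` type, and the choice to reject SNAPSHOT versions are all assumptions made for illustration, not something Jenkins or Nexus produces for you.

```python
from typing import List, NamedTuple


class Release(NamedTuple):
    group_id: str
    artifact_id: str
    version: str


def parse_handoff(text: str) -> List[Release]:
    """Parse one 'groupId:artifactId:version' entry per line.

    Blank lines are skipped. Malformed entries and SNAPSHOT versions raise
    ValueError, on the assumption that only released versions get deployed.
    """
    releases = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        parts = line.split(":")
        if len(parts) != 3 or not all(parts):
            raise ValueError(f"expected groupId:artifactId:version, got {line!r}")
        release = Release(*parts)
        if release.version.endswith("-SNAPSHOT"):
            raise ValueError(f"not a release version: {line!r}")
        releases.append(release)
    return releases


# Hypothetical handoff from development to operations.
handoff = """
com.example:webapp:1.4.2
com.example:batch-jobs:2.0.1
"""

for release in parse_handoff(handoff):
    print(release.artifact_id, release.version)
```

Everything operations needs from development fits in those two lines of text; no source checkout, branch name, or build tool version is involved.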

This is much easier than the alternative of telling the Puppet team to "run a build", waiting for them to come back with a question about some broken build script, helping diagnose that build script, realizing that someone on the ops team "had a better idea" for some compiler option, and finally getting some build output only to realize that they didn't use the right version of Maven on the Puppet VM image that ran the build. Use Puppet for what it does best, and that isn't triggering a build.

The Argument Goes Both Ways

Yes, I've seen mega-builds managed by Puppet. They are difficult to manage, and they end up starting too early in the process. When you extend tools way beyond what they focus on, your systems tend to become very brittle.

On the other side of this spectrum, I've seen companies try to make Jenkins do everything that Puppet does and that's also a big problem. Jenkins wasn't designed to provide OS-level configuration management. Yes, you can use it for that. In this way, Jenkins is a bit dangerous because it can do everything, but that doesn't mean you should use it to automate production deployments. That's what Puppet and Chef do. Use the right tool for the job.

The lesson I've learned from being in several devops initiatives is that devops is not about developers and administrators getting together to hold hands and value each other as co-equals in a collaborative, consensus-based process of harmony. It isn't. The ironic fact of this trend is that devops is about drawing strongly defined boundaries between these two concerns (Development and Operations), using tools like Jenkins, Nexus, and Puppet to create more structure than existed before.

If you are looking for some structure to your devops initiatives, check out Nexus. I've already seen it work very well as the collaboration point between dev and ops.